The following content is purely for educational and entertainment purposes. It does not reflect personal political opinions or beliefs.

Today, I’m diving into a wild AI experiment: using GPT-2 and two cutting-edge techniques, PEFT and LoRA, to generate never-before-seen speeches that sound like Donald Trump. But before we get into that, let’s hear from AI Trump himself:

“I can’t believe it. And I’m going to tell you, this is the most important election in the history of our country. I just want to thank you very much. This election is a choice between two extremes: extreme left and mainstream. The left is running to destroy America, destroy the American dream, and destroy our freedom. Democrats are running for president because of the fact that they’ve taken over the world for many, many years.”

Pretty convincing, right? But how did we get here? Let me take you through the journey. And by the way, all the code for this project is available here for you to refer to and build on: https://github.com/Lumos-Academy/text-generator/blob/main/gpt2.ipynb

First, I experimented with traditional recurrent neural networks: RNNs, LSTMs, and GRUs. These models are great for sequential data like text, but they had some… interesting results. Here’s the best output I got from a GRU model:

“and if we don’t get the deal we want. we don’t want them. we want to make a deal. and then they say, “what do you think of this? he does anything about it. i don’t know what’s going to happen. i mean, they said, “we have the greatest political establishment that i’ve ever seen once the most important election in the united states. we stand with the incredible heroes of law enforcement. we believe in the dignity of work and the sanctity of life.”

Not quite Trump, is it? It’s picking up on some patterns, but it’s mostly word salad. Training it for longer would probably help, but output like this is why I moved on to more advanced models.
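For context on what "training" means for these recurrent models: they learn by repeatedly predicting the next token from the tokens before it. The sketch below assumes a character-level setup (the helper `make_training_pairs` and the window length of 4 are hypothetical, not taken from the project notebook) and shows how raw text can be sliced into (input window, next character) training pairs:

```python
def make_training_pairs(text: str, seq_len: int) -> list[tuple[str, str]]:
    """Slide a window of `seq_len` characters over `text`; each window's
    target is the character that immediately follows it."""
    pairs = []
    for i in range(len(text) - seq_len):
        pairs.append((text[i : i + seq_len], text[i + seq_len]))
    return pairs


pairs = make_training_pairs("make a deal", seq_len=4)
for inputs, target in pairs[:3]:
    print(repr(inputs), "->", repr(target))
# 'make' -> ' '
# 'ake ' -> 'a'
# 'ke a' -> ' '
```

At generation time, the predicted character is appended to the text and the window slides forward by one, so the model keeps feeding on its own output.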

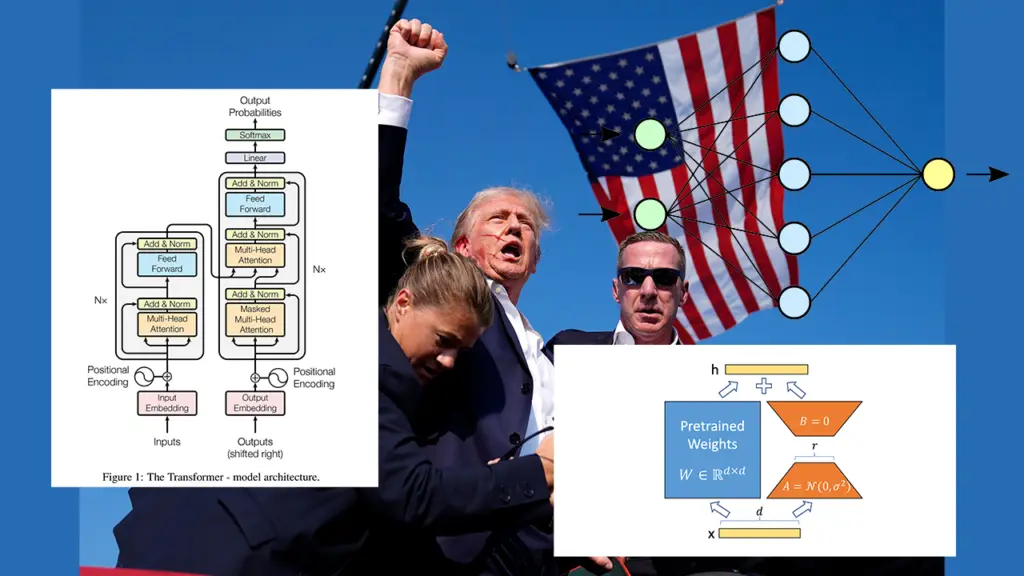

Next, I moved to GPT-2, a pre-trained language model based on the Transformer architecture. We’ve all heard of ChatGPT, right? GPT-2 was released in 2019 and is an earlier model in the same GPT family; ChatGPT originally launched on GPT-3.5. And here’s where it gets interesting: instead of retraining the entire model, which would require far more memory and compute, I used Parameter-Efficient Fine-Tuning (PEFT) with Low-Rank Adaptation (LoRA).

PEFT is a set of techniques designed to fine-tune large language models more efficiently. Traditional fine-tuning updates all parameters of a pre-trained model, which can lead to catastrophic forgetting and requires significant memory. PEFT methods, on the other hand, only update a small subset of parameters or introduce a small number of new parameters.

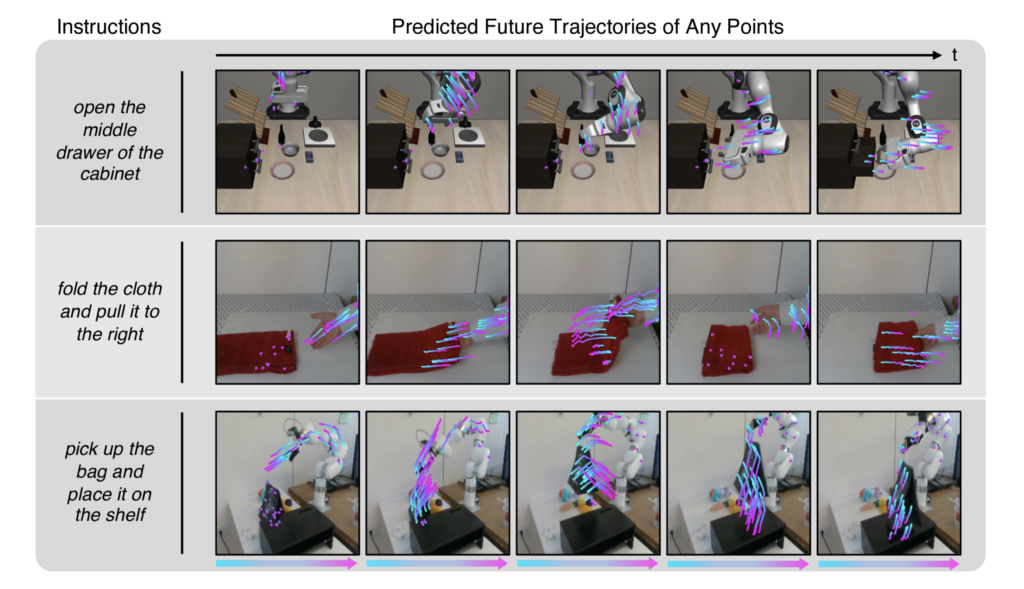

LoRA, specifically, adds a pair of low-rank (rank-decomposition) matrices alongside selected existing weight matrices; the product of each pair represents the update to that weight. These matrices have a significantly smaller parameter count than the original model weights. During training, only these additional matrices are updated, keeping the pre-trained weights frozen.
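To put "significantly smaller" in numbers, here is a back-of-the-envelope count for GPT-2 small. It assumes LoRA is applied only to each block's fused attention projection (`c_attn`) with rank 8; both are illustrative choices, not necessarily the settings used in the project notebook. For a weight matrix mapping `d_in` inputs to `d_out` outputs, LoRA adds an `A` matrix of shape (rank × d_in) and a `B` matrix of shape (d_out × rank).

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Extra trainable parameters LoRA adds to one d_in -> d_out weight matrix:
    A has shape (rank, d_in) and B has shape (d_out, rank)."""
    return rank * (d_in + d_out)


# GPT-2 small: 12 transformer blocks, hidden size 768. Its fused attention
# projection (c_attn) maps 768 -> 2304 (query, key and value concatenated).
n_layers, d_model = 12, 768
d_in, d_out = d_model, 3 * d_model
rank = 8  # hypothetical choice of LoRA rank

frozen = n_layers * d_in * d_out
added = n_layers * lora_param_count(d_in, d_out, rank)
print(f"frozen c_attn weights: {frozen:,}")                          # 21,233,664
print(f"LoRA trainable params: {added:,} ({added / frozen:.2%} of c_attn)")  # 294,912 (1.39% of c_attn)
```

That is under 300,000 trainable parameters, well under 1% of GPT-2 small's roughly 124 million, while the frozen weights are reused as-is.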

By using LoRA, we’re essentially adding a small, trainable “Trump filter” on top of GPT-2’s vast knowledge, allowing it to adapt to Trump’s unique speaking style without losing its general language understanding.
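To make the "filter" idea concrete, here is a minimal pure-Python sketch of a single LoRA-adapted linear layer, following the formulation in the original LoRA paper: h = Wx + (α/r)·BAx, with B initialized to zero. In practice A starts out random; here every value is hand-picked so the output is easy to check, and `matvec` and `lora_forward` are hypothetical helper names, not part of any library.

```python
def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, rank: int) -> list[float]:
    """LoRA-adapted linear layer: h = W x + (alpha / rank) * B (A x).
    W is the frozen pre-trained weight; only A and B would receive updates."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


# Toy sizes: 4 inputs -> 3 outputs with a rank-2 adapter. Values are hand-picked.
rank, alpha = 2, 4.0
W = [[1.0, 0.0, 2.0, -1.0],
     [0.5, 1.0, 0.0, 0.0],
     [0.0, -1.0, 1.0, 1.0]]                 # frozen, shape (d_out, d_in)
A = [[0.5, 0.0, 0.0, 0.25],
     [0.0, 0.5, 0.5, 0.0]]                  # trainable, shape (rank, d_in)
B = [[0.0] * rank for _ in range(len(W))]   # trainable, shape (d_out, rank), starts at zero
x = [1.0, -0.5, 0.25, 2.0]

print(matvec(W, x))                           # [-0.5, 0.0, 2.75]
print(lora_forward(W, A, B, x, alpha, rank))  # [-0.5, 0.0, 2.75]: identical while B is zero

B[0][0] = 0.5  # pretend one training step nudged a single adapter weight
print(lora_forward(W, A, B, x, alpha, rank))  # [0.5, 0.0, 2.75]
```

Because B starts at zero, fine-tuning begins from exactly the pre-trained model's behavior, and the adaptation only grows as training moves A and B; W itself is never modified.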

Now, let’s look at how the model progressed during training. But first, I want to show you why I moved from simple RNNs to more advanced models. Here’s what my RNN output looked like after 10 epochs:

“the people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united states and incredible people of the united s”

Yikes, right? It’s a broken record! This is a common problem with simple RNNs – they can get stuck in repetitive loops. To fix this, we need to introduce some randomness into the generation process. That’s where the concept of “temperature” comes in.
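Before getting to temperature, here is a toy illustration of how a loop like that arises. The word table and probabilities below are invented for the example, not learned from the speech data: if generation always takes the single most likely next word (greedy decoding), any cycle in those choices repeats forever.

```python
# Hypothetical toy "model": probabilities for the next word given the current one.
# The words and numbers are invented for illustration, not learned from the data.
next_word_probs = {
    "incredible": {"people": 0.8, "heroes": 0.2},
    "people": {"of": 0.7, "are": 0.3},
    "of": {"the": 0.9, "our": 0.1},
    "the": {"united": 0.6, "wall": 0.4},
    "united": {"states": 0.95, "nations": 0.05},
    "states": {"and": 0.55, "is": 0.45},
    "and": {"incredible": 0.4, "we": 0.35, "the": 0.25},
}


def greedy_generate(start: str, steps: int) -> list[str]:
    """Always append the single most likely next word (no randomness at all)."""
    words = [start]
    for _ in range(steps):
        options = next_word_probs[words[-1]]
        words.append(max(options, key=options.get))
    return words


print(" ".join(greedy_generate("incredible", 14)))
# incredible people of the united states and incredible people of the united states and incredible
```

Sampling from the distribution instead of always taking the top word breaks cycles like this, because lower-probability continuations such as "heroes" or "we" occasionally get picked.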

In AI text generation, temperature works like a creativity dial. Before picking the next word, the model’s scores for every candidate word are divided by the temperature and turned into a probability distribution. A low temperature sharpens that distribution, so the AI plays it safe and almost always picks the most likely next word (in the limit of zero, it always does), which can lead to repetition like we just saw. A temperature of 1 leaves the model’s distribution unchanged, and higher values flatten it, letting the AI get wild and creative by giving unlikely words a real chance, sometimes at the expense of coherence.
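Here is a minimal sketch of temperature sampling in plain Python. The candidate words and their scores (logits) are hypothetical, and the helper names are illustrative rather than taken from the project notebook or any library; a temperature of 0 is rejected here because it is usually handled separately as plain argmax (greedy) decoding.

```python
import math
import random


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Scale each logit by 1/temperature, then normalize with a softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (use argmax for greedy decoding)")
    scaled = {word: score / temperature for word, score in logits.items()}
    top = max(scaled.values())  # subtracting the max keeps exp() from overflowing
    exps = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def sample_next_word(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one word at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Hypothetical next-word scores after a prompt like "we're going to build a"
logits = {"wall": 3.0, "deal": 2.0, "team": 1.0, "rocket": -1.0}

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}:", {w: round(p, 3) for w, p in probs.items()})

rng = random.Random(42)
print([sample_next_word(logits, 1.0, rng) for _ in range(5)])
```

At T=0.2 almost all of the probability lands on "wall" (about 99%), at T=1.0 the model's own distribution is used unchanged, and at T=2.0 even "rocket" gets roughly 6%, which is how a high temperature lets unlikely, sometimes incoherent words slip in.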

Let me show you what I mean. Here’s what happened when I cranked up the temperature to 1 with my GPT-2 model:

“You’re looking at them in the middle of the night, not really. You have to take that picture of a white supremacist in a robe holding a sign on the beach. It was an actual sign. What do you do when you see that sign? You can’t do that. So the first thing we did with our police officers is stop their guns with them. If you don’t kill somebody, they get you killed, but if they shoot you with their rifles, you shoot yourself.”

That was pretty dark. This is what happens when we let the AI get really creative. It’s picking up on Trump’s stream-of-consciousness style, but it’s going off the deep end.

For our final model, we’ll use a more moderate temperature to balance coherence and creativity. Now, let’s look at how our GPT-2 model with LoRA progressed during training.

After 50 epochs, it produced this: “‘we’re gonna build a wall.’ this is a lie. it’s an attack on america, and i don’t know if it even works. and we’ll use it as a weapon against law enforcement. we’ll have it, we will have the wall, but we’re going to use the money and our military, our intelligence, all of it. we are going for a war on drugs, a major war. the drug war is one of the biggest economic problems”

We’re getting there.

A moderate sampling temperature lets us generate text that’s varied and interesting while still maintaining coherence and staying true to the learned style.

Just for fun, I decided to ask it a couple of questions after training it for 1,000 epochs. I prompted: “What are your thoughts on free healthcare for all Americans?”

i have a lot of respect for you, but i don’t care what you think. we will always protect patients with pre-existing conditions and ensure equal access to care for everyone. there will be no discrimination. we will defend religious liberty and the right to keep and bear arms. and we’ll strike down terrorists who threaten our citizens and they threaten civil rights. i’ll never do that. our second amendment is under siege. you know that, right? and nobody talks about it, because nobody cares. nobody even talks. [applause] we’re going to defend the second and it’s under assault. it really is.

Classic Trump pivot, am I right? The AI has learned to hit Trump’s favorite talking points.

I even asked AI Trump what his thoughts were on Kamala Harris running against him, and whether he thought she would win.

Here’s his response: i don’t know, she’s a weak person. she doesn’t have the temperament to be president. but she can’t be trusted. so when you look at how she went, her numbers, you know what? she is weak. and i’m going to talk to her. if she gets the nomination, i will tell you. we’re going after the money. the rest of the stuff is going up. there’s no doubt. they are going through the roof. look, we have a big problem with the drug war.

Now, as fascinating as this technology is, it’s crucial to consider its ethical implications. The ability to generate convincing fake speeches raises concerns about misinformation and the authenticity of digital content. As AI practitioners, we have a responsibility to use these tools ethically and educate others about their capabilities and limitations.

In conclusion, this experiment demonstrates the power of modern language models when combined with efficient fine-tuning techniques like LoRA. We’ve seen how AI can learn to mimic specific speaking styles with impressive accuracy. As we continue to push the boundaries of what’s possible with AI, it’s essential to approach these advancements with both excitement and caution.

If you found this interesting, please like this post for more deep dives into AI technology. Drop your thoughts in the comments: I’d love to hear your perspective on AI-generated content, the potential of techniques like LoRA and PEFT, and what you’d like to see next. Thanks for reading!